Turing Machines explained from the ground up

I gave a talk at ConvergeSE 2012 on this topic so I thought I'd write it up as a blog post as well. That said, let's jump right in.

Turing

So the obvious thing to start with is Alan Turing, the man for whom Turing machines are named. Turing was a British mathematician sometimes called the "father of Computer Science." I call him a mathematician because he did computer science before there was a discipline so named. He was influential in several fields, including AI and cryptanalysis. Specifically, he worked at Bletchley Park during World War II, where he helped break communications encrypted by the German Enigma machine. His first major achievement was a paper written in 1936, before he had obtained his Ph.D., proving that the Entscheidungsproblem had no solution.

The Entscheidungsproblem was proposed by a very famous mathematician named David Hilbert in 1928. Simply put, the question is whether or not an "algorithm" could be devised to determine if a statement in first-order logic is universally valid. To answer it, Turing devised a theoretical machine and used it to show that no such algorithm exists. The machine became known as a Turing machine.

Background

In order to understand this machine, we need to start with some terminology. Most of these terms have roots in language, so they'll seem familiar. First, we have an alphabet. This is simply a set of symbols. For example, we have the set of lowercase roman letters, denoted {"a", "b", "c", ..., "z"}. Another example, which we'll use later, is the binary alphabet, {"0", "1"}. We can represent any natural number with just these two symbols.

The natural thing to do with a set is to combine the elements into a sequence of symbols. This construction is called a string. Some examples from the binary alphabet are "0", "0101001", and the empty string. So, to reiterate, zero or more symbols from an alphabet gets you a string.

Finally, a formal language is a set of strings "over" an alphabet. For example, we could define a formal language of metasyntactic variables: {"foo", "bar", "baz", "quux"}. The alphabet here is taken to be the lowercase roman letters, as before. Another example is the two-digit binary numbers: {"00", "01", "10", "11"}. Notice that both of these sets are finite, but this doesn't have to be the case. Consider the alphabet {"a", "b"} and the formal language {"b", "ab", "aab", "aaab", ...}, which is the set of strings made of zero or more "a"s followed by a single "b". You could write this more compactly as a*b, but more on that later.
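To make these definitions a bit more tangible, here is a minimal Python sketch. The function names `over_alphabet` and `in_a_star_b` are made up for this illustration. Finite languages are plain sets of strings, while the infinite language has to be described by a membership test instead.

```python
# Finite formal languages can be written down directly as sets of strings.
metasyntactic = {"foo", "bar", "baz", "quux"}
two_digit_binary = {"00", "01", "10", "11"}

def over_alphabet(string, alphabet):
    """Return True if every symbol of `string` is drawn from `alphabet`."""
    return all(symbol in alphabet for symbol in string)

def in_a_star_b(string):
    """Membership test for the infinite language {"b", "ab", "aab", ...}:
    zero or more "a"s followed by exactly one "b"."""
    return string.endswith("b") and set(string[:-1]) <= {"a"}

print(over_alphabet("0101001", {"0", "1"}))  # True
print("10" in two_digit_binary)              # True
print(in_a_star_b("aaab"))                   # True
print(in_a_star_b("aba"))                    # False
```

Note that the empty string is a valid string over any alphabet, so `over_alphabet("", {"0", "1"})` is also true.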

Finite Automata

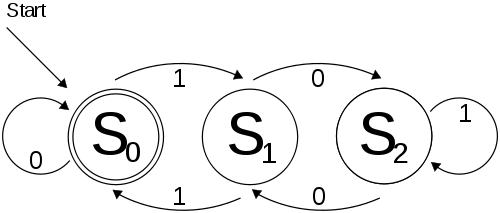

Now for the fun stuff: Deterministic Finite Automata, also known as DFAs or just finite state machines. These simple "machine"s are defined by an alphabet, a set of states, and a transition function. The alphabet defines the valid symbols that the machine can take as input. One of the states is defined as the start state and one or more are defined as accepting states. The starting state is pretty obvious, it's just the initial state of the machine. The accepting states determine whether or not the machine "accepts" the input it was given. The most interesting part, though, is the transition function. It takes a symbol, from the input stream, and the current state the machine is in and returns the new state we will transition to. Here's a graphical representation of a DFA that accepts binary strings that are a multiple of 3:

The notation requires some explanation. The circled values represent states, with the start state denoted by an arrow pointing to it and the accepting states denoted with double circles. The arrows leaving and entering the states are the transitions. Taken all together, they define the transition function for this DFA. The arrow from state S_0 to S_1 with the label "1" means that if we are in state S_0 and we read a "1" on the input stream, then we should transition to state S_1. Furthermore, just from the diagram we can infer that the alphabet here is {"0", "1"}. This is because a DFA must have exactly one outgoing arrow for each symbol on each state. This is the deterministic property.

It will really help cement the concept if you run through a few examples. Consider the input "00". We start at state S_0 and see a "0", so we stay in the same state. When we see the second "0", we again transition to state S_0. Since we're out of input at this point, we consider whether or not we're in an accepting state. It turns out we are, which means that the machine has accepted the input. In this case, the machine is saying that "00" (0 in decimal) is a multiple of 3. Since 0*3=0, we can see this is true. Now consider the input "1". In this case, we end up in state S_1, which is not an accepting state. Therefore, the machine rejects that input. This makes sense because there is no integer x such that x * 3 = 1.
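If you'd rather let a computer do the walking, here is a small Python sketch of this DFA. I'm assuming the diagram is the usual three-state construction in which each state tracks the remainder (mod 3) of the number read so far; only the transitions out of S_0 are spelled out in the text above, so the rest of the table is my reconstruction. The names `TRANSITIONS` and `dfa_accepts` are invented for this example.

```python
# Transition function as a table: (current state, input symbol) -> next state.
# Reading bit b in remainder state r moves to remainder (2*r + b) mod 3.
TRANSITIONS = {
    ("S0", "0"): "S0", ("S0", "1"): "S1",
    ("S1", "0"): "S2", ("S1", "1"): "S0",
    ("S2", "0"): "S1", ("S2", "1"): "S2",
}
START = "S0"
ACCEPTING = {"S0"}

def dfa_accepts(bits):
    """Run the DFA over `bits` and report whether it ends in an accepting state."""
    state = START
    for symbol in bits:
        state = TRANSITIONS[(state, symbol)]
    return state in ACCEPTING

print(dfa_accepts("00"))   # True  (0 is a multiple of 3)
print(dfa_accepts("1"))    # False (1 is not)
print(dfa_accepts("110"))  # True  (6 is a multiple of 3)
```

Notice that the table has exactly one entry for every (state, symbol) pair. That is the deterministic property in code form.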

Now, consider what would happen if we relax the deterministic constraint. This would mean that the transition function can now return zero or more states. In other words, we can have no transition out of a state at all (a dead end), a single transition (as before), or multiple transitions. In the final case, we're effectively allowing the machine to split itself into however many transitions there are. This effectively gives us a tree of automata. At first glance, this looks like it should give us quite a bit more expressive power.

These machines are called nondeterministic finite automata or NFAs. Let's look at an example:

This machine is using the same alphabet as before and has only two states, p and q. Here, the starting state is p and q is the only accepting state. Notice that when in state p and seeing a "1", we simultaneously stay in the p state and also move to the q state. You can think of this as multiple universes or cloning the machine so that we keep track of all possible paths. It turns out that this machine accepts any binary string that ends with a "1".
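The "multiple universes" idea can be sketched in Python by tracking the set of all states the machine could be in at once. I'm assuming here that p loops to itself on "0" and that q has no outgoing transitions, which is what the description above suggests but the text doesn't spell out. The names `NFA` and `nfa_accepts` are just for illustration.

```python
# (state, symbol) -> set of possible next states. Missing entries mean
# "no transition", so that branch of the computation simply dies.
NFA = {
    ("p", "0"): {"p"},
    ("p", "1"): {"p", "q"},
}

def nfa_accepts(bits, start="p", accepting=frozenset({"q"})):
    """Follow every possible path at once; accept if any path ends in q."""
    current = {start}
    for symbol in bits:
        current = {
            nxt
            for state in current
            for nxt in NFA.get((state, symbol), set())
        }
    return bool(current & accepting)

print(nfa_accepts("101"))  # True  (ends with "1")
print(nfa_accepts("10"))   # False (ends with "0")
```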

Surprisingly, NFAs and DFAs are equivalent in expressive power. That is, any NFA can be converted into a DFA that accepts the same language. So even though we allow nondeterminism, we can still convert it into an equivalent, but generally larger, DFA.
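The standard way to do this conversion is the subset (or powerset) construction: each DFA state is a set of NFA states. Here is a rough Python sketch of the idea, applied to the two-state NFA above. As before, I'm assuming p loops on "0" and q has no outgoing transitions, and the function name `subset_construction` is my own.

```python
def subset_construction(nfa, start, accepting, alphabet):
    """Build a DFA whose states are frozensets of NFA states."""
    start_set = frozenset({start})
    dfa = {}              # (state set, symbol) -> state set
    seen = {start_set}
    todo = [start_set]
    while todo:
        current = todo.pop()
        for symbol in sorted(alphabet):
            target = frozenset(
                nxt
                for state in current
                for nxt in nfa.get((state, symbol), ())
            )
            dfa[(current, symbol)] = target
            if target not in seen:
                seen.add(target)
                todo.append(target)
    dfa_accepting = {states for states in seen if states & accepting}
    return dfa, start_set, dfa_accepting

nfa = {("p", "0"): {"p"}, ("p", "1"): {"p", "q"}}
dfa, dfa_start, dfa_accepting = subset_construction(nfa, "p", {"q"}, {"0", "1"})

for (states, symbol), target in sorted(dfa.items(), key=lambda kv: (sorted(kv[0][0]), kv[0][1])):
    print(sorted(states), symbol, "->", sorted(target))
```

For this particular NFA the resulting DFA happens to have only two states, {p} and {p, q}. In the worst case, though, an NFA with n states can need up to 2^n DFA states, which is where the "generally larger" comes from.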

Regular languages

The set of strings that a finite automaton accepts is called its language. A regular language is any language that can be recognized by a finite automaton, either deterministic or not. Even more interesting is that a language is regular if and only if some regular expression describes it. What this means is that regular expressions and DFAs have the same capability in describing languages. So we can convert from a DFA into a regular expression and vice versa.
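As a quick sanity check on that claim, here is a Python sketch using the standard `re` module. The pattern `(0|1(01*0)*1)*` is a commonly cited regular expression for binary multiples of 3; I derived it by reading the loops off the three-state remainder DFA, so treat it as my assumption about what the diagram above converts to. Keep in mind that Python's regex engine also supports features (such as backreferences) that go beyond regular languages; this example only uses the regular subset.

```python
import re

# Zero or more "a"s followed by a single "b", from the Background section.
A_STAR_B = re.compile(r"a*b")

# Binary strings whose value is a multiple of 3 (the empty string also matches,
# just as the DFA accepts the empty input in its start state).
MULTIPLE_OF_3 = re.compile(r"(0|1(01*0)*1)*")

def regex_accepts(pattern, string):
    """True if the whole string (not just a prefix) matches the pattern."""
    return pattern.fullmatch(string) is not None

print(regex_accepts(A_STAR_B, "aaab"))      # True
print(regex_accepts(MULTIPLE_OF_3, "110"))  # True  (6)
print(regex_accepts(MULTIPLE_OF_3, "111"))  # False (7)
```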

Turing Machines

Finally, we're ready to describe Turing Machines. They are just a small step up in complexity from the finite automata we just looked at. We now have a name for the stream of input: the tape. Turing machines can read from and write to the tape, as well as control its movement. So now we need two alphabets: the input alphabet and the output (or tape) alphabet. We still have a set of states, except that now we will have one starting state, one accepting state, and one rejecting state. There will still be a transition function, except that now it takes a state and the symbol at the current position on the tape and returns a new state, possibly a symbol to write, and a direction to move (either left or right). Notice that we don't have to write a symbol, but we do have to move.

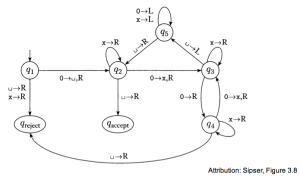

Lets look at an example Turing Machine. We'll name it M for machine. It's going to have an input alphabet of {"0"} and a tape alphabet of {"_", "x"}. The machine will accept "0" strings whose length is a power of 2. That is, string's whose length can be expressed as 2^x for some integer x. For example, "0", "00", and "0000" are the smallest strings that should be accepted because they are of length 1 (2^0), 2 (2^1), and 4 (2^2), respectively. To be clear, the machine would reject strings like "000" and "00000".

This description should look familiar. The labels on the transition arrows have gotten a little more interesting. They are in the form "symbol -> [symbol,] direction", where the first symbol defines when this transition is applicable, the second symbol is optional and defines the symbol to write to the tape, and the direction is either L or R for moving left or right on the tape.

So if we imagine the tape with a "." at the current position, the transition of states for the input "00" goes something like this:

You should try "running" the machine on other inputs, making a table similar to the one above. Basically, the procedure is to mark off half of the 0s on each pass. If a pass finds an odd number of 0s (other than a single remaining 0), then we know we should reject. If a pass finds only one 0 left, we accept the string.

Once you get comfortable with what this machine is doing, you'll notice that each state has a specific purpose. For example, the state q1 replaces the first "0" with a "_" so that the machine knows when it's back at the beginning of the string. States q2, q3, and q4 are doing the bulk of the work, marking through the "0"s with "x"s and moving the tape. State q5 is a reset procedure, moving us back to the beginning of the input.
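If you want to check your hand-worked tables, here is a small Python simulator. Big caveat: the transition table below is my reconstruction, assuming the diagram follows the well-known textbook machine for this language (five working states plus accept and reject). It matches the roles of q1 through q5 described above, but the exact arrows in the figure may differ. The names `DELTA` and `run` are made up for this sketch.

```python
BLANK = "_"

# (state, symbol read) -> (next state, symbol to write, direction to move).
# Assumed reconstruction of the machine M; see the caveat above.
DELTA = {
    ("q1", "0"): ("q2", "_", "R"),      # mark the left end of the input
    ("q1", "_"): ("reject", "_", "R"),  # empty input: length 0 is not a power of 2
    ("q1", "x"): ("reject", "x", "R"),
    ("q2", "x"): ("q2", "x", "R"),
    ("q2", "0"): ("q3", "x", "R"),      # cross off a 0
    ("q2", "_"): ("accept", "_", "R"),  # only one 0 was left
    ("q3", "x"): ("q3", "x", "R"),
    ("q3", "0"): ("q4", "0", "R"),      # skip a 0
    ("q3", "_"): ("q5", "_", "L"),      # pass was even, rewind
    ("q4", "x"): ("q4", "x", "R"),
    ("q4", "0"): ("q3", "x", "R"),      # cross off a 0
    ("q4", "_"): ("reject", "_", "R"),  # odd number of 0s on this pass
    ("q5", "0"): ("q5", "0", "L"),
    ("q5", "x"): ("q5", "x", "L"),
    ("q5", "_"): ("q2", "_", "R"),      # back at the left end, start a new pass
}

def run(input_string, max_steps=100_000):
    """Simulate the machine; return True on accept, False on reject."""
    tape = dict(enumerate(input_string))  # sparse tape, blank everywhere else
    state, head = "q1", 0
    for _ in range(max_steps):
        if state in ("accept", "reject"):
            return state == "accept"
        symbol = tape.get(head, BLANK)
        state, write, move = DELTA[(state, symbol)]
        tape[head] = write
        head += 1 if move == "R" else -1
    raise RuntimeError("step limit exceeded")

for n in range(1, 9):
    print(n, run("0" * n))
```

The `max_steps` guard is there because, unlike a DFA, a Turing machine isn't guaranteed to halt. This one always does, but a simulator shouldn't assume that in general.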

Conclusion

So why do we care about Turing machines? First of all, they give us a concrete definition of what an algorithm is. This is related to the Church-Turing thesis and what it means to be "computable." It also turns out that they are equivalent in power to any other reasonable computational model. This is fairly surprising considering how relatively simple they are. They represent the essence of what it is to be a "computer."

References

Deterministic finite automaton. (2012, March 11). Retrieved from http://en.wikipedia.org/wiki/Deterministic_finite_automaton

Nondeterministic finite automaton. (2012, April 20). Retrieved from http://en.wikipedia.org/wiki/Nondeterministic_finite_automaton

Petzold, C. (2008). The annotated Turing. Indianapolis: Wiley Publishing, Inc.

Sipser, M. (2006). Introduction to the theory of computation. (2nd ed.). Boston: Thomson Course Technology.

Turing machine. (2012, April 17). Retrieved from http://en.wikipedia.org/wiki/Turing_machine